This post is part of a series that introduces the operation of multicast routing:

- Multicast routing: A step by step

- Multicast routing: PIM dense

- Multicast routing: From the source

- Multicast routing: Hold ASM for a moment

- Multicast routing: Rather than dense, sparse it and let’s meet at rendez-vous point

- Multicast routing: RPF

In this post we explore the operation of the PIM multicast routing protocol in its default mode, PIM dense mode. The idea is simple: flood the network with multicast traffic, then prune it wherever no client wants it.

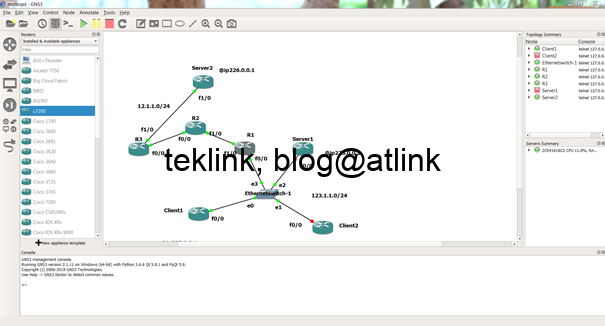

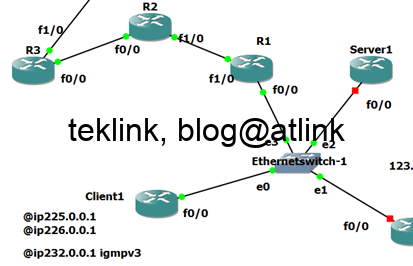

Server2, the source of our multicast traffic (pings to group 226.0.0.1), is 3 hops away from its receivers, Client1 and Client2, which are members of multicast group 226.0.0.1 and want to receive this traffic from Server2.

Bring up the multicast setup

The network setup is shown in the next figure:

R3 receives the multicast traffic and forwards it out the interfaces in its outgoing interface list (those facing the clients downstream). The show ip mroute command shows an (S,G) entry for this flow, sourced from 12.1.1.254 and destined to multicast group 226.0.0.1. The entry is flagged T (SPT-bit set); traffic is received on FastEthernet1/0 (facing the source) and forwarded out FastEthernet0/0 (facing the clients), which is in Forward state in Dense mode.

At R2 (one hop downstream from the source, towards the clients), the (S,G) entry is present but the incoming interface is not what we expect, and the outgoing interface list contains all interfaces, each in Forward state in Dense mode.

At R2, the outgoing interface list even includes the interface that should be the incoming one! The incoming interface is Null and the RPF neighbor shown is incorrect.

We check that R2 has no route to the source. This may explain why the RPF (Reverse Path Forwarding) check fails.

We enable multicast routing debugging and see that the RPF (Reverse Path Forwarding) check fails; this confirms what the earlier show ip cef output indicated.

Let’s configure a static route to Server2 and check that forwarding towards the source is correct.

Now the mroute table is populated with correct information: the incoming interface is FastEthernet0/0, and the RPF neighbor is the next hop of the static route entered earlier.
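The RPF check that failed and then succeeded at R2 can be sketched in a few lines of Python. This is only an illustration of the idea, not how IOS implements it: the prefixes, the next-hop address and the helper names `rpf_lookup` and `rpf_check` are assumptions made up for the example. Only the source address 12.1.1.254 and the interface names come from the lab.

```python
import ipaddress

def rpf_lookup(routes, source):
    """Return (interface, next_hop) of the longest-prefix route to source, or None."""
    src = ipaddress.ip_address(source)
    best = None
    for prefix, interface, next_hop in routes:
        net = ipaddress.ip_network(prefix)
        if src in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, interface, next_hop)
    return None if best is None else (best[1], best[2])

def rpf_check(routes, source, arrival_interface):
    """A packet passes RPF only if it arrived on the interface used to reach its source."""
    hit = rpf_lookup(routes, source)
    return hit is not None and hit[0] == arrival_interface

# Hypothetical R2 routing table: one connected network, no route to 12.1.1.254.
r2_routes = [("10.0.23.0/24", "FastEthernet1/0", None)]
before = rpf_check(r2_routes, "12.1.1.254", "FastEthernet0/0")

# Add a static route toward the source (prefix length and next hop are made up).
r2_routes.append(("12.1.1.0/24", "FastEthernet0/0", "10.0.12.1"))
after = rpf_check(r2_routes, "12.1.1.254", "FastEthernet0/0")
wrong_interface = rpf_check(r2_routes, "12.1.1.254", "FastEthernet1/0")

print(before, after, wrong_interface)
```

Without a route to the source the lookup returns nothing, so there is no incoming interface and no RPF neighbor. Once the static route exists, only traffic arriving on the interface that leads back to the source passes the check.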

We do the same thing on R1 and check the mroute information for multicast group 226.0.0.1.

We check that Server1 is now receiving replies to its pings from Client1.

In this lab, PIM is configured in dense mode (the default mode; the other mode, sparse, is discussed in later posts in this series).

At R2, the output of show ip pim interface, which lists the interfaces enabled for PIM, shows that both FastEthernet0/0 and FastEthernet1/0 run PIM v2 in Dense mode. The command also shows the DR (designated router) on each segment.

At R3, we check that this information is consistent between routers (with R2, for example). R2 sees R3 as the DR on FastEthernet1/0, and R3 confirms the same.
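Both routers agree on the DR because each one runs the same election over the neighbors it hears on the segment: the highest DR priority wins, and the highest IP address breaks a tie. A minimal sketch of that rule follows; the addresses, the priorities and the function name `elect_dr` are hypothetical and not taken from the lab output.

```python
import ipaddress

def elect_dr(neighbors):
    """neighbors: list of (name, ip, dr_priority).

    Highest priority wins; the highest IP address breaks ties.
    """
    winner = max(neighbors, key=lambda n: (n[2], int(ipaddress.ip_address(n[1]))))
    return winner[0]

# Hypothetical addressing of the segment shared by R2 and R3, equal priorities.
segment = [("R2", "10.0.23.2", 1), ("R3", "10.0.23.3", 1)]
dr_equal_priority = elect_dr(segment)

# Raising R2's priority (again a made-up value) would override the address tie-break.
dr_r2_preferred = elect_dr([("R2", "10.0.23.2", 10), ("R3", "10.0.23.3", 1)])

print(dr_equal_priority, dr_r2_preferred)
```

With equal priorities, the router with the higher address on the segment becomes DR, which would match R3 being elected if it holds the higher address on that link.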

Recall that PIM is the multicast routing protocol that delivers multicast traffic in a network like our lab setup, where routing is necessary because the server is not in the same L2 (broadcast) network as the clients.

The dense variant of PIM first floods the server's traffic across the whole multicast domain (all routers and interfaces configured for PIM) and then prunes the paths or interfaces where nobody wants to receive it.
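The flood-then-prune idea can be modeled on a small tree. The sketch below is a simplification under stated assumptions: it ignores timers, asserts and state refresh, and it adds a hypothetical router R4 with no receivers (not part of the lab) so that there is a branch to prune. The function names `flood` and `prune` are also made up for the example.

```python
def flood(tree, root):
    """Dense-mode flood: every router reachable from the root receives the traffic."""
    reached, stack = set(), [root]
    while stack:
        node = stack.pop()
        reached.add(node)
        stack.extend(tree.get(node, []))
    return reached

def prune(tree, root, receivers):
    """Return the routers that keep forwarding once prunes have propagated upstream."""
    kept = set()

    def wanted(node):
        # A router stays on the tree if it has a local receiver or at least
        # one downstream router that still wants the traffic.
        downstream = [wanted(child) for child in tree.get(node, [])]
        keep = node in receivers or any(downstream)
        if keep:
            kept.add(node)
        return keep

    wanted(root)
    return kept

# First-hop router R3 feeds R2 (which feeds R1) and a hypothetical R4.
tree = {"R3": ["R2", "R4"], "R2": ["R1"]}

flooded = flood(tree, "R3")
after_prune = prune(tree, "R3", receivers={"R1"})
no_receivers = prune(tree, "R3", receivers=set())

print(sorted(flooded), sorted(after_prune), sorted(no_receivers))
```

After the flood every router has seen the traffic; after pruning only the branch leading to a receiver remains, and with no receivers at all the whole tree is pruned back to the source.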

Flood and prune in PIM dense mode

Let’s check the flood and prune behavior of PIM dense mode.

Client1 is no longer interested in 226.0.0.1. This is confirmed by the show ip igmp membership command: no entry is recorded.

How does R1 react when we add new memberships?

To watch PIM in action, we enable debug ip pim.

We check the active IGMP memberships using the show ip igmp membership command.

We notice that 226.0.0.1 is flagged in the IGMP table as S for static and A for aggregate.

The Exp. field, which indicates the status of the membership tracking, shows that Client1 has stopped joining the group.

As a test, we reconfigure Client1 to join the group; the result is shown in the next output:

The Exp. field countdown is running again (counting down from 3 minutes).

As soon as the IGMP memberships (static or dynamic) stop, R1 queues a prune for its RPF neighbor, which causes the Exp. (Expire) field to stop counting down.

In the mroute table the entry is flagged P, which indicates that it is pruned.

Additionally, the outgoing interface list changes to Null (because Client1 was the last client that wanted this traffic).

If Client1 joins again, R1 builds a message for its PIM RPF neighbor (a Graft, in dense mode) to ask for the group's traffic, and adds the corresponding interface back to the outgoing interface list.
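The behavior observed on R1 can be summarized as a tiny state model of one (S,G) entry. This is a sketch under assumptions, not IOS internals: the class name `MrouteEntry`, its methods and the client-facing interface name on R1 are hypothetical, and timers are left out entirely.

```python
class MrouteEntry:
    """Toy (S,G) entry on a last-hop router: tracks the OIL and the P flag."""

    def __init__(self, source, group, oil=()):
        self.source, self.group = source, group
        self.oil = set(oil)   # outgoing interface list
        self.sent = []        # messages sent to the RPF neighbor, in order

    @property
    def pruned(self):
        # An empty (Null) outgoing interface list corresponds to the P flag.
        return not self.oil

    def member_left(self, interface):
        if interface in self.oil:
            self.oil.remove(interface)
            if not self.oil:
                # Last interested interface is gone: prune upstream.
                self.sent.append("prune")

    def member_joined(self, interface):
        if interface not in self.oil:
            was_pruned = self.pruned
            self.oil.add(interface)
            if was_pruned:
                # Entry was pruned: ask the RPF neighbor to resume forwarding.
                self.sent.append("graft")

# R1's client-facing interface name is an assumption for this example.
entry = MrouteEntry("12.1.1.254", "226.0.0.1", oil={"FastEthernet0/0"})

entry.member_left("FastEthernet0/0")
pruned_after_leave = entry.pruned

entry.member_joined("FastEthernet0/0")
pruned_after_join = entry.pruned

print(pruned_after_leave, pruned_after_join, entry.sent)
```

The entry sends a prune only when its last outgoing interface disappears, and a graft only when the first interface comes back, which mirrors the P flag appearing and disappearing in the mroute table.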

Conclusion

In this post we checked the basic operation of the PIM multicast routing protocol in dense mode from the client perspective. In the next posts we’ll deepen this understanding and continue to explore what the CLI show and debug commands offer for configuring and troubleshooting multicast.