In this post, let’s detail the operation of PIM (Protocol Independent Multicast) in sparse mode. Previous posts tackled the operation of PIM dense mode. Recall that PIM is the multicast routing protocol that allows routers to exchange the information needed to distribute multicast traffic to receivers.

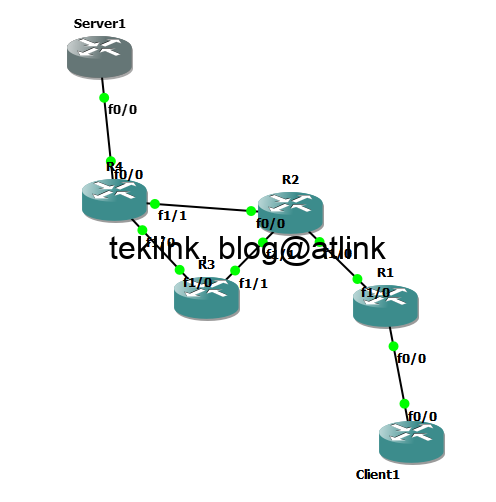

lab setup

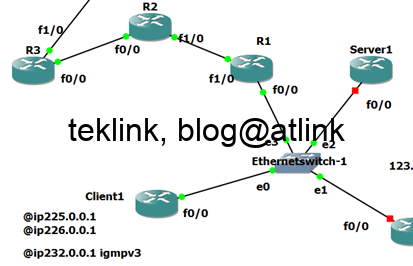

In our setup, Client1 is configured to join the multicast group 226.0.0.1, whose traffic is sent from Server1. Server1 is five hops away from Client1, which is why we need a multicast routing protocol (PIM).

Instead of using PIM-Dense (flood and prune), seen in the previous post of this series on multicast operation, we use PIM-Sparse to build the forwarding path from the server to the client.

PIM-Sparse

PIM-Sparse is enabled on R1, R2, R3 and R4 routers

RIP for unicast routing (and RPF)

We use RIP for unicast routing and, as a test, send a multicast ping from the source toward the group of receivers.

IGMP

At R1 we first check that Client1 is registered to the group, which is the case.

The interface of router R1 facing Client1 is configured for PIM-Sparse

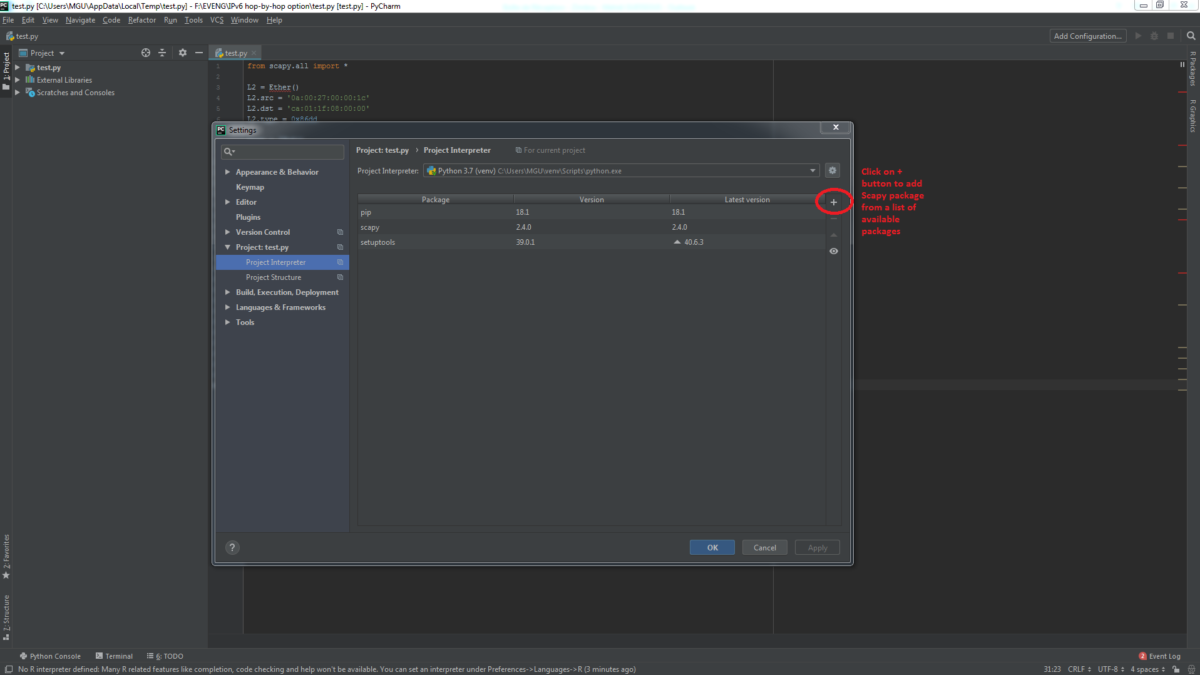

enable PIM in sparse mode

The details of this PIM interface are given in the following figure. We can check that PIMv2 is enabled at the interface level in sparse mode, and that the RP (Rendezvous Point) for this mode is the router itself (R1). We also have counters for PIM neighbors and for multicast packets in and out. Finally, we check that multicast packets are fast switched (forwarded through the fast-switching cache) and not process switched (handled one by one by a scheduled CPU process).

All interfaces are configured for PIM-Sparse mode of operation

PIM neighborship

We check that the PIM neighborship is established between R2 and R1 using the command show ip pim neighbor. We can also see that R2 is now the DR (designated router) on this multicast link.

Now that PIM is enabled and the neighborships are established, let’s enable multicast routing and check the mroute table to confirm that multicast traffic is effectively forwarded.
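To make the DR result less mysterious, here is a minimal Python sketch of the election rule as PIM defines it: the highest DR priority wins, and the highest IP address breaks a tie. The `PimNeighbor` class, the `elect_dr` helper and the 10.0.12.x addresses are assumptions made for illustration, not values from the lab. The sketch also ignores the case where a neighbor does not advertise a DR priority at all.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address


@dataclass(frozen=True)
class PimNeighbor:
    """A PIM speaker on a shared link (hypothetical model)."""
    name: str
    address: IPv4Address
    dr_priority: int = 1  # default DR priority carried in PIM hellos


def elect_dr(routers):
    """Return the DR: highest priority first, highest IP address as tie-breaker."""
    return max(routers, key=lambda r: (r.dr_priority, r.address))


# Hypothetical addressing for the R1-R2 link (not taken from the lab).
r1 = PimNeighbor("R1", IPv4Address("10.0.12.1"))
r2 = PimNeighbor("R2", IPv4Address("10.0.12.2"))

print(elect_dr([r1, r2]).name)  # prints "R2"
```

With equal priorities, R2 wins only because its assumed address is the higher one; raising the priority of R1 in this model would make R1 the DR.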

mroute table

After a while the mroute table gets populated with information for group 226.0.0.1. From the show ip mroute command output, we see that the RP for this group is listed as 0.0.0.0, which we read here as R1 itself (note that IOS shows 0.0.0.0 in this field when no RP is known for the group), and that the outgoing interface list for this group contains fa0/0, toward Client1 as receiver. The incoming interface, however, is empty (Server1 has not sent any traffic yet). The RPF check guarantees that a multicast packet is accepted only when it arrives on the interface that the unicast routing table (in our case connected routes or RIP) uses to reach the source IP address.

The RP (Rendezvous Point) is set to local
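The RPF rule described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the routing table, the prefixes and the interface names below are hypothetical and are not taken from the lab, and a real router also considers metrics and administrative distance.

```python
from ipaddress import ip_address, ip_network

# Hypothetical unicast routing table: prefix -> interface used to reach it.
UNICAST_ROUTES = {
    ip_network("10.0.23.0/24"): "fa0/0",
    ip_network("10.0.34.0/24"): "fa1/0",
    ip_network("192.168.4.0/24"): "fa1/0",  # assumed server subnet, learned via RIP
}


def rpf_interface(source, routes=UNICAST_ROUTES):
    """Return the interface leading back to `source` (longest prefix match), or None."""
    addr = ip_address(source)
    matches = [net for net in routes if addr in net]
    if not matches:
        return None  # no unicast route to the source
    best = max(matches, key=lambda net: net.prefixlen)
    return routes[best]


def rpf_check(source, arrival_interface, routes=UNICAST_ROUTES):
    """Accept a packet only if it arrived on the interface that leads to its source."""
    return rpf_interface(source, routes) == arrival_interface


print(rpf_check("192.168.4.10", "fa1/0"))  # prints True
print(rpf_check("192.168.4.10", "fa0/0"))  # prints False
```

The second call fails because the packet shows up on an interface that the unicast table would not use to reach the source, which is exactly the situation RPF is meant to reject.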

source traffic

Let’s send traffic from the server

At R4,

The router receives traffic from the source but fails to route it

The mroute table holds no information for group 226.0.0.1

debug multicast forwarding

We activate PIM on the fa0/0 interface (to enable multicast packet processing) and run debug ip mfib pak

A debug on R1 shows that the packet from the server fails the acceptance check. Is it due to sparse mode, or does it fail in general? To find out, we put the interface into dense mode

The debug shows the output in the following figure. The flow is now accepted, but forwarding fails because there is no forwarding interface.

We set the interface back to sparse mode

In addition, we configure R4 to be an RP (static) instead of R1.

Now the packet is accepted for forwarding

But the packets are still dropped because there is no forwarding interface

We also check that R4 initiates the register process with the RP (which is R4 itself in our case)

At R4,

The mroute table is populated with an (S,G) entry, but the entry is pruned because its outgoing interface list is empty
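The two stages seen in the debugs (acceptance first, then the search for a forwarding interface) can be modeled with a small Python sketch. The `MrouteEntry` class, the `forward` helper, the source address and the interface names are hypothetical; this is a simplified model of the behavior, not the router's actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class MrouteEntry:
    """Simplified (S,G) entry: one incoming interface and an outgoing interface list."""
    source: str
    group: str
    incoming_interface: str
    outgoing_interfaces: list = field(default_factory=list)

    @property
    def pruned(self):
        # With an empty outgoing list there is nowhere to send copies.
        return not self.outgoing_interfaces


def forward(entry, arrival_interface):
    """Return the interfaces the packet is copied to; an empty list means dropped."""
    if arrival_interface != entry.incoming_interface:
        return []  # fails the acceptance (RPF) check
    return list(entry.outgoing_interfaces)


# Assumed values: the server address and interface names are illustrative only.
entry = MrouteEntry("192.168.4.10", "226.0.0.1", incoming_interface="fa0/0")
print(entry.pruned, forward(entry, "fa0/0"))  # prints True []

entry.outgoing_interfaces.append("fa1/0")  # a downstream join arrives
print(entry.pruned, forward(entry, "fa0/0"))  # prints False ['fa1/0']
```

In this model the packet is accepted in both cases, but it only leaves the router once some downstream neighbor has joined and populated the outgoing list.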

Let’s configure the RP on the other routers to match R4

At R1, as soon as we configure the RP address to match R4

We check that R1 builds a join message for 226.0.0.1 and puts it in the queue for R2

At R2,

The router knows nothing about the RP and consequently rejects the join received from R1 as invalid
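Why the join is rejected can be sketched as follows, assuming a simplified model in which a (*,G) join names the RP and the receiving router accepts it only when that RP matches its own group-to-RP mapping. The `SparseRouter` class, its methods and the RP address 10.0.34.4 are hypothetical names and values chosen for illustration.

```python
from ipaddress import ip_address, ip_network


class SparseRouter:
    """Simplified PIM sparse-mode router holding static RP mappings."""

    def __init__(self, name):
        self.name = name
        self.rp_mappings = {}  # group range -> RP address
        self.star_g = {}       # group -> set of outgoing interfaces

    def set_rp(self, rp_address, group_range="224.0.0.0/4"):
        """Record a static RP for a group range (all multicast groups by default)."""
        self.rp_mappings[ip_network(group_range)] = ip_address(rp_address)

    def rp_for(self, group):
        """Return the RP known for `group`, or None when no mapping covers it."""
        addr = ip_address(group)
        for net, rp in self.rp_mappings.items():
            if addr in net:
                return rp
        return None

    def receive_join(self, group, rp_in_join, interface):
        """Accept a (*,G) join only if it names the RP this router knows for G."""
        known = self.rp_for(group)
        if known is None or known != ip_address(rp_in_join):
            return False  # join is invalid from this router's point of view
        self.star_g.setdefault(group, set()).add(interface)
        return True


r2 = SparseRouter("R2")
print(r2.receive_join("226.0.0.1", "10.0.34.4", "fa0/0"))  # prints False

r2.set_rp("10.0.34.4")  # assumed address for R4 acting as RP
print(r2.receive_join("226.0.0.1", "10.0.34.4", "fa0/0"))  # prints True
```

Once the mapping exists, the interface toward R1 is added to the outgoing list of the (*,G) entry, and the router can pass its own join further toward the RP.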

We configure the RP information on the remaining routers R2 and R3

Now the server gets a response from the client to the ping it sends to the multicast group

At R3 the mroute table shows

The RP information is correct and the RPF is set to R4 on the fa1/0 interface

rpf operation

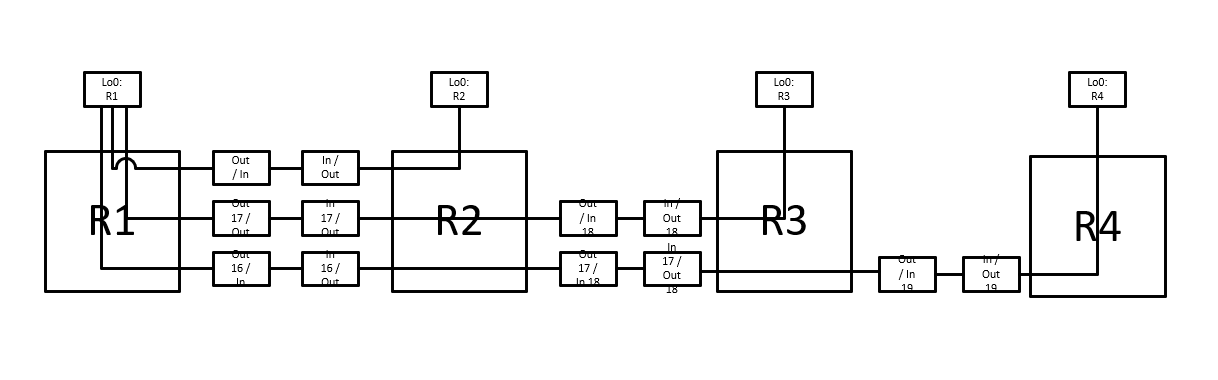

At R2 we add a new link to R4, which creates a shorter path between R4 and R2

problem

The ping from the server stops working

Any idea?

conclusion

In this post we’ve checked the operation of PIM in sparse mode. The configuration of the RP is central to this mode of operation. From there, all PIM routers build the forwarding tree toward the RP (using the RPF rule to validate the PIM messages they exchange). Once this first half of the path is built, the second half is built from the server to the RP, where it joins the first half. RPF also comes into play to check that multicast traffic arrives on the interface that the unicast routing table uses to reach its source.